Statistical Data Cleaning for Deep Learning of Automation Tasks from Demonstrations

Project maintained by CalCharles

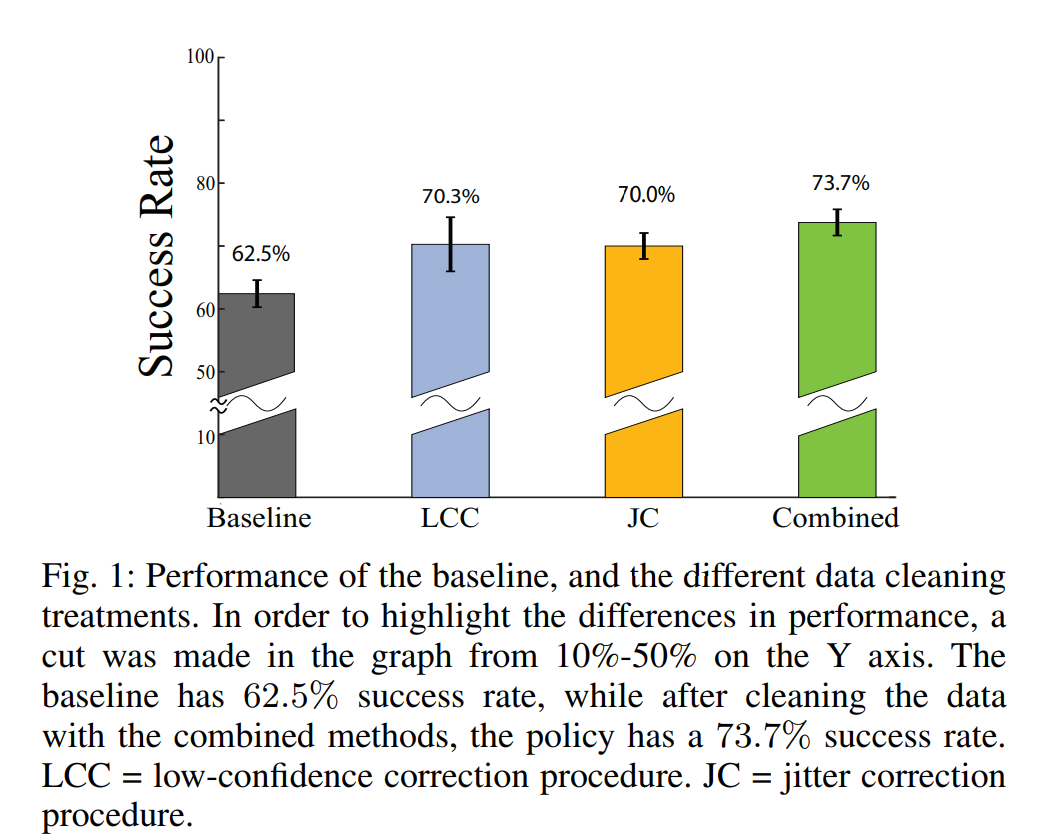

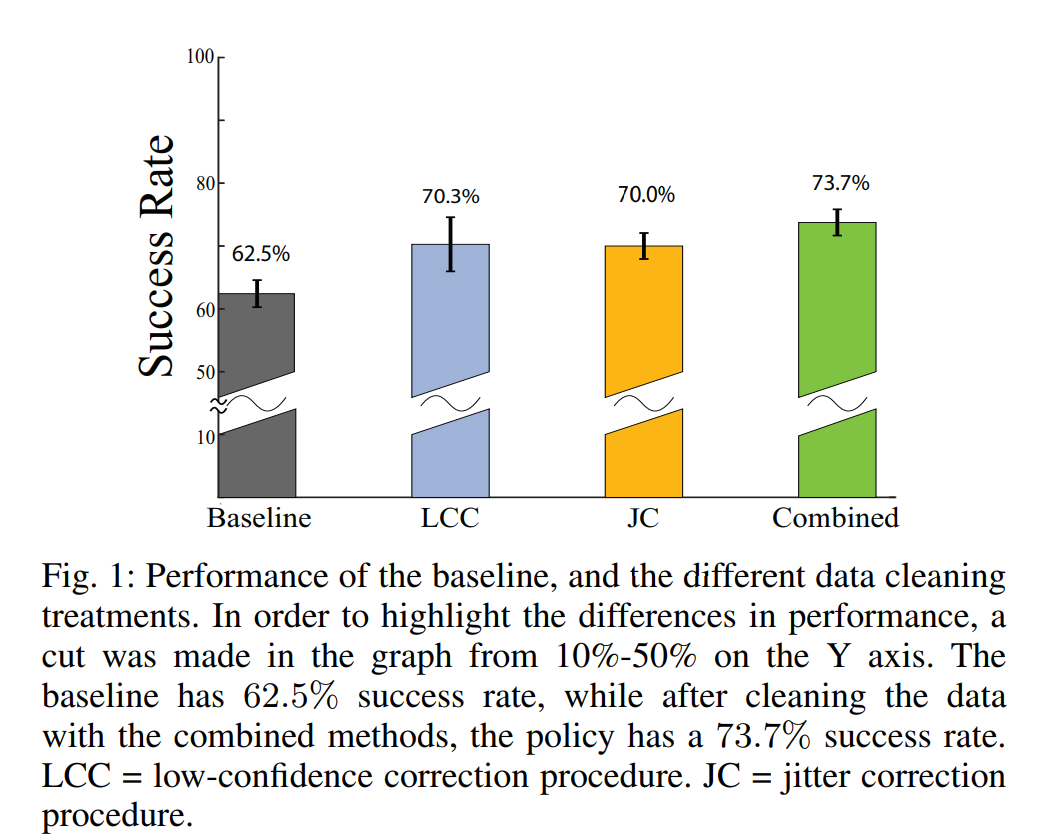

Plot of improvement results for different treatments

Abstract

Automation using deep learning from demonstrations requires many training examples. Gathering this data is time-consuming and expensive, and human demonstrators are prone to inconsistencies and errors that can delay or degrade learning. This paper explores how characterizing supervisor inconsistency and correcting for this noise can improve task performance with a limited data budget. We consider a planar part extraction task (separating one part from a group) in which human operators provide demonstrations by teleoperating a 2-DOF robot. We analyze 30,000 image-control pairs from 480 trajectories. After error correction, trained CNN models improve on the baseline by 11.2% in mean absolute success rate.
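The abstract does not spell out the correction procedure. As a rough illustration of what statistical cleaning of teleoperated demonstrations can look like, here is a minimal sketch that flags unusually jerky trajectories using a median/MAD (modified z-score) rule. It assumes each trajectory is reduced to its sequence of 2-DOF controls (the paired images are ignored). The jitter metric, the 3.5 cutoff (a commonly used threshold for the modified z-score), and the names `jitter_score` and `flag_inconsistent` are hypothetical choices for illustration, not the authors' method.

```python
import math
import statistics
from typing import List, Sequence, Tuple

# Hypothetical representation: one trajectory is a sequence of 2-DOF controls (dx, dy).
# The images paired with each control are omitted; this sketch inspects controls only.
Control = Tuple[float, float]


def jitter_score(controls: Sequence[Control]) -> float:
    """Mean L2 distance between consecutive controls (an assumed proxy for demonstrator inconsistency)."""
    if len(controls) < 2:
        return 0.0
    steps = [
        math.hypot(b[0] - a[0], b[1] - a[1])
        for a, b in zip(controls, controls[1:])
    ]
    return sum(steps) / len(steps)


def flag_inconsistent(trajectories: List[Sequence[Control]], threshold: float = 3.5) -> List[int]:
    """Return indices of trajectories whose modified z-score of jitter exceeds the threshold.

    The test is one-sided: only unusually high jitter is flagged.
    """
    scores = [jitter_score(t) for t in trajectories]
    med = statistics.median(scores)
    mad = statistics.median(abs(s - med) for s in scores)
    if mad == 0:
        # A zero MAD makes the modified z-score undefined; this sketch then flags nothing.
        return []
    return [
        i for i, s in enumerate(scores)
        if 0.6745 * (s - med) / mad > threshold
    ]


if __name__ == "__main__":
    # Toy data: five fairly steady demonstrations and one that oscillates sharply.
    demos = [
        [(0.0, 0.0), (0.1, 0.0)],
        [(0.0, 0.0), (0.2, 0.0)],
        [(0.0, 0.0), (0.1, 0.0)],
        [(0.0, 0.0), (0.15, 0.0)],
        [(0.0, 0.0), (0.2, 0.0)],
        [(0.0, 0.0), (5.0, 0.0), (0.0, 0.0)],
    ]
    print(flag_inconsistent(demos))  # → [5]
```

Median and MAD are used instead of mean and standard deviation so that a few very noisy demonstrations do not inflate the spread they are measured against. Whether flagged trajectories should be dropped, down-weighted, or corrected is a separate design decision this sketch leaves open.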

Final paper submitted to IEEE CASE 2017

Authors and Contributions

Caleb Chuck, Michael Laskey, Sanjay Krishnan, Ruta Joshi, Roy Fox, Ken Goldberg

Please contact Caleb Chuck (caleb_chuck AT yahoo DOT com) for further information.